Recently, telematic performance has emerged as a necessity. The need for physical isolation and distancing during the pandemic has brought into relief the degree to which creative musical collaboration is reliant on sharing physical space. Musicians employ a variety of explicit and implicit gestural cues to coordinate their actions: a subtle nod of the head, an exaggerated rise of the torso, or a fleeting glance can all signal vital information to co-performers. Research has shown that visual coordination among performers and visualization of remote performers for audiences are among the most pressing needs in telematic performance.

The technologies underlying telematic performance currently allow us to achieve “CD quality” audio with sufficiently low latency between locations on the same continent to enable rhythmic music performance. Video, however, is a different story. The very process of encoding a digital video signal can be too slow to enable rhythmic coordination, not even factoring in transmission and decoding time. Even if we could push the limits further with video, there is evidence that video might not actually be the most effective way to support what musicians and audiences need. Research has shown that even at the same scale and information rate, physical movement in space is more engaging than video, and supports more effective interpretation of gestures. Video has limited ability to communicate complex human qualities such as “effort” and “tension” that are essential in music, but which involve extremely subtle and highly nuanced gestures or isometric muscle activations. Finally, video suffers from aesthetic deficiencies. In telematic performance, where we rely on the network to support metaphors of extending or sharing space, video only highlights spatial disjunction. We will always see artifacts of the “other” environment; we’ll never make it “look” like we’re sharing space, even if we can make it sound like it.

The goal of this project is therefore to explore methods of incorporating visual communication of effort, gesture, and movement into telematic performance using kinetic displays and without video transmission.

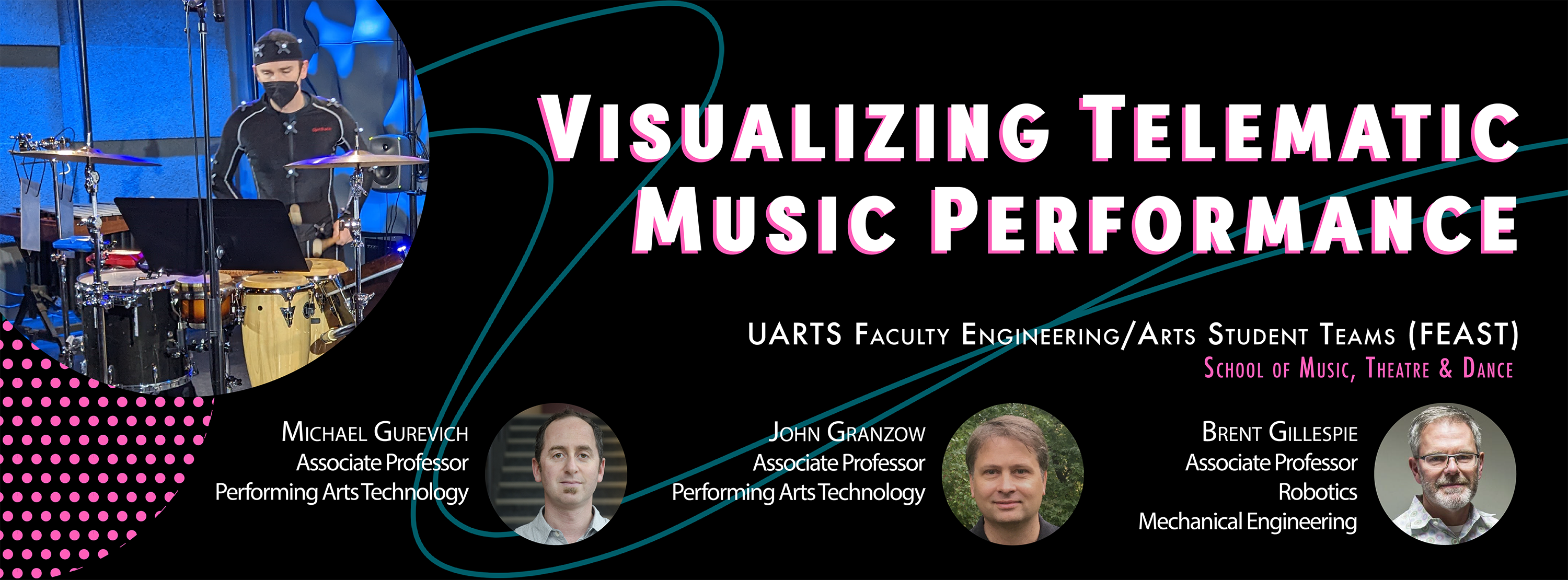

Since the beginning of this project, we have developed a platform of mechatronic displays—non-anthropomorphic kinetic avatars—that display the movements of remote musicians, captured with an infrared camera-based mocap system, in 3-dimensional, physical space.

The current goals of the UARTS Faculty Engineering/Arts Student Team (FEAST) are to:

- refine the design of our mechatronic displays and explore alternatives;

- develop and refine strategies for mapping human movement to a mechatronic display in real-time, including those incorporating machine learning;

- explore the use of haptics and other alternative modes of sensing and communicating movement and effort;

- conduct studies with musicians and audiences to document and describe the experience and performance of the system.

Music Performance (1 Student)

Preferred Skills: College-level performer on a traditional musical instrument, with experience in improvisation and/or chamber music

Likely Majors/Minors: INTPERF, MUSPERF, PAT

Mechatronics/Robotics & Interactive Sensing (1 Student)

Preferred Skills: Actuators, feedback control, simple motion control algorithms, sensors, computer programming; embedded computing using platforms such as Arduino, analog data capture using data acquisition devices, communication and data transfer between embedded and host computers

Likely Majors/Minors: CE, CS, EE, ME, ROB

Motion Capture/Biomechanics & Nonverbal Communication (1 Student)

Preferred Skills: Marker-based infrared motion capture systems such as Qualisys or Vicon, other biomechanics measurement techniques such as electromyography (EMG), force plates, inertial measurement; theories of nonverbal human communication and coordination using gesture and movement, psychology and physiology of entrainment, kinesics

Likely Majors/Minors: ARTDES, COMM, EE, KINES, ME, PAT, PSYCH, SI

Qualitative Research, Ethnography, HCI (1 Student)

Preferred Skills: Qualitative and/or ethnographic research methods such as thematic analysis, focus groups, grounded theory; IRB; analysis of qualitative data; HCI

Likely Majors/Minors: ARTDES, COMM, CS, PSYCH, SI

Haptics (1 Student)

Preferred Skills: Actuators, force feedback, feedback control, biosensing, computer programming

Likely Majors/Minors: CE, CS, EE, ME, ROB

Machine Learning (1 Student)

Preferred Skills: Practical experience with multiple approaches to machine learning, such as supervised / unsupervised learning, reinforcement learning, dimensionality reduction, neural networks, deep learning; experience with real-time and/or systems

Likely Majors/Minors: CS, CE, EE, ME, ROB

Faculty Project Leads

Michael Gurevich’s highly interdisciplinary research employs quantitative, qualitative, humanistic, and practice-based methods to explore new aesthetic and interactional possibilities that can emerge in performance with real-time computer systems. He is currently Associate Professor in the Departments of Performing Arts Technology and Chamber Music at the University of Michigan’s School of Music, Theatre and Dance, where he teaches courses in physical computing, electronic music performance and the history and aesthetics of media art. Other research areas include network-based music performance, computational acoustic modeling of bioacoustic systems, and electronic music performance practice.

An advocate of “research through making,” he explores many of the same themes in his creative practice, through experimental compositions involving interactive media, sound installations, and the design of new musical interfaces. His book manuscript in progress documents the cultural, technological, and aesthetic contexts for the emergence of computer music in Silicon Valley.

Prior to the University of Michigan, Professor Gurevich was a Lecturer at the Sonic Arts Research Centre (SARC) at Queen’s University Belfast, and a research scientist at the Institute for Infocomm Research (I2R) in Singapore. He holds a Bachelor of Music with high distinction in Computer Applications in Music from McGill University, as well as an M.A. and Ph.D. from the Center for Computer Research in Music and Acoustics (CCRMA) at Stanford University, where he also completed a postdoc.

During his Ph.D. and M.A. at Stanford, he developed the first computational acoustic models of whale and dolphin vocalizations, working with Jonathan Berger and Julius Smith as well as collaborators at the Hopkins Marine Station and Stanford Medical School. Concurrent research with Chris Chafe and Bill Verplank investigated networked music performance and haptic music interfaces.

Professor Gurevich is an active author, editor and peer reviewer in the New Interfaces for Musical Expression (NIME), computer music and human-computer interaction (HCI) communities. He was co-organizer and Music Chair for the 2012 NIME conference in Ann Arbor and is Vice-President for Membership of the International Computer Music Association. He has published in leading journals and has presented at conferences and workshops around the world.

John Granzow applies the latest manufacturing methods to both scientific and musical instrument design. After completing a Master of Science in psychoacoustics, he attended Stanford University for his PhD in computer-based music theory and acoustics. Granzow started and taught the 3D Printing for Acoustics workshop at the Center for Computer Research in Music and Acoustics. He attended residencies at the Banff Centre and the Cité Internationale des Arts in Paris. His research focuses on computer-aided design, analysis, and fabrication for new musical interfaces with embedded electronics. He also leverages these tools to investigate acoustics and music perception.

Granzow’s instruments include a long-wire installation for Pauline Oliveros, sonified easels for a large-scale installation at La Condition des Soies in Lyon, France, and a hybrid gramophone commissioned by the San Francisco Contemporary Music Players. He is a member of the Acoustical Society of America, where he frequently presents his findings. In 2013, Granzow was awarded best paper for his work modeling the vocal tract as it couples to free reeds in musical performance.

John Granzow also currently serves as the ArtsEngine Faculty Director.

Brent Gillespie received his undergraduate degree in Mechanical Engineering from the University of California, Davis, and his M.S. and PhD from Stanford University. At Stanford he was associated with both the Center for Computer Research in Music and Acoustics (CCRMA) and the Dextrous Manipulation Laboratory. After his PhD, he spent three years as a postdoc at Northwestern University working in the Laboratory for Intelligent Machines (LIMS). He is currently Professor in the Department of Mechanical Engineering at the University of Michigan in Ann Arbor.

Likely Majors/Minors: ARTDES, CE, COMM, CS, EE, KINES, ME, PAT, PSYCH, SI, ROB

Meeting Details: TBD

Application: Consider including a link to your portfolio or other websites in the personal statement portion of your application to share work you would like considered as part of your submission.

Summer Opportunity: Summer research fellowships may be available for qualifying students.

Citizenship Requirements: This project is open to all students on campus.

IP/NDA: Students who successfully match to this project team will be required to sign an Intellectual Property (IP) Agreement prior to participation.

Course Substitutions: CoE Honors