UARTS FEAST

Faculty Engineering/Arts Student Teams

The goal of this project is to develop a new genre of inclusive augmented reality games and room-sized interactive systems that remove physical and social barriers to play. The project addresses the unmet need of players with different mobility abilities to play and exercise together in spaces such as school gymnasiums, community centers, and family entertainment centers.

Designers of digital musical instruments have worked to make them accessible to people with cognitive or motor disabilities. The TAMIE project advances this work by letting users train their own instruments. Machine learning maps each user's movements to musical output, with an emphasis on consistent gesture recognition so that interaction remains reliable.

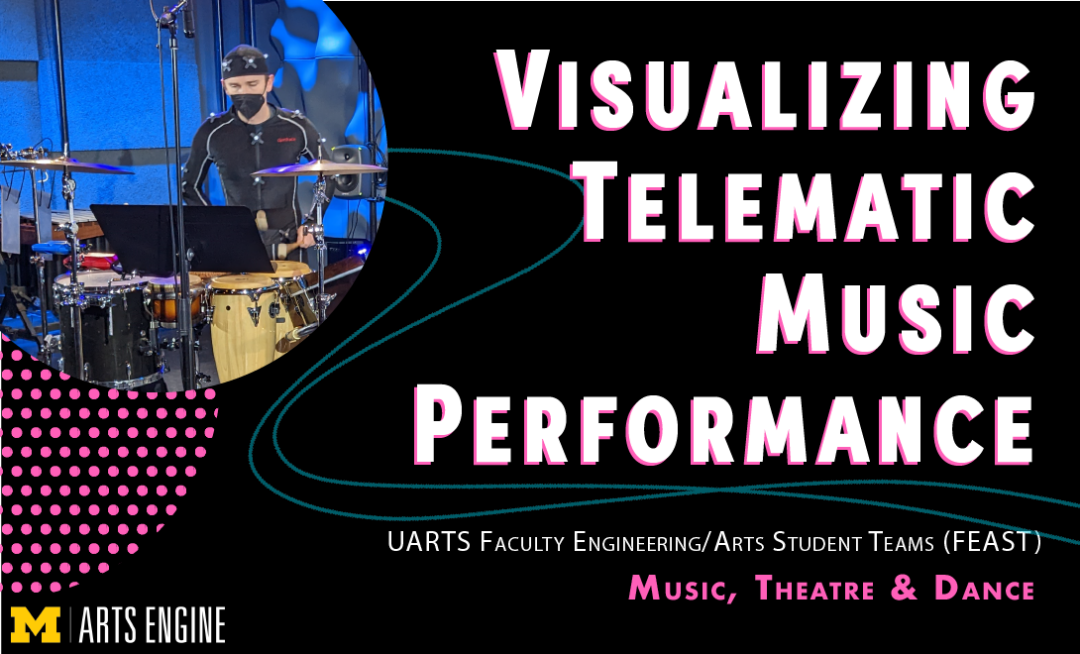

The goal of this project is to explore methods of incorporating visual communication of effort, gesture, and movement into telematic performance without video transmission. Practical experiments with different sensing techniques, including infrared motion capture, inertial measurement, electromyography, and force sensing, will be coupled with novel, digitally fabricated mechatronic displays.

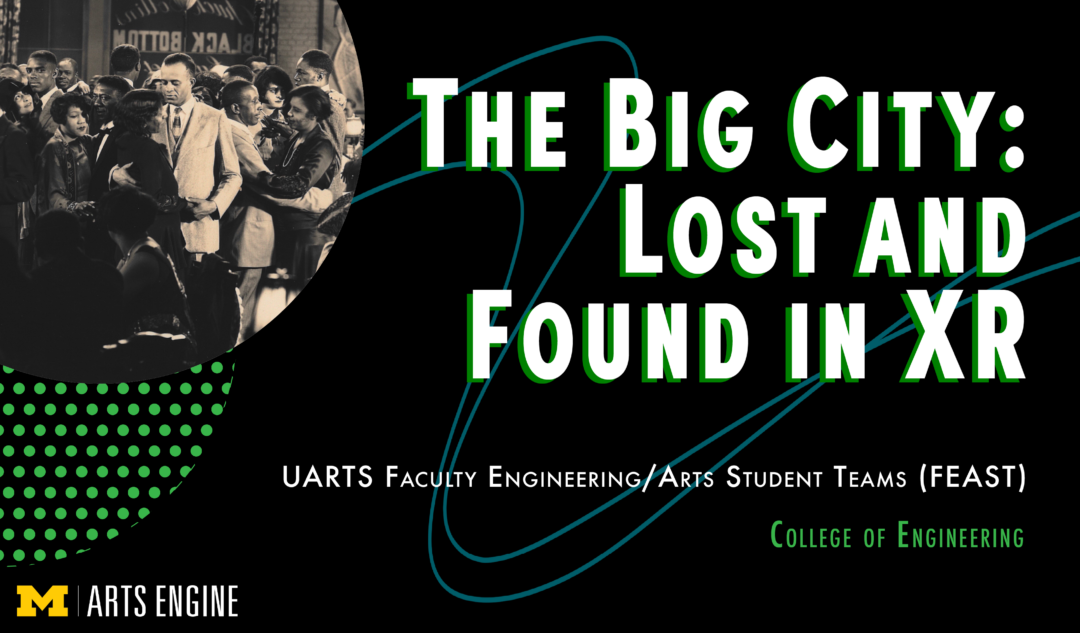

No copies are known to exist of the 1928 lost film THE BIG CITY. All that survives are still photographs, a cutting continuity, and a detailed scenario of the film. Using Unreal Engine, detailed 3D model renderings, and live performance, students will take users back in time into the fictional Harlem Black Bottom cabaret and clubs shown in the film.

This project proposes a new class of eye imaging device for children featuring an embodied robot character. We seek to transform the sterile, cold, and clinical pediatric eye imaging process into an engaging activity that invites children into an interactive partnership. This robotic eye imaging character may enable routine eye examinations in young patient populations, which could substantially improve the standard of care in pediatric ophthalmology.

This team integrates LiDAR (Light Detection and Ranging) and photogrammetry to capture 3D point-cloud data from artifacts, buildings, urban environments, and landscapes. The point clouds are then animated in Unreal Engine, a gaming platform and advanced 3D creation tool, to develop empathic, inclusive spatial narratives through immersive interfaces.